We don’t really notice it, because ChatGPT, Claude, and Gemini are free or cost only a few dozen euros per month. Yet behind this friendly façade lie completely disproportionate economics: running these models costs a fortune.

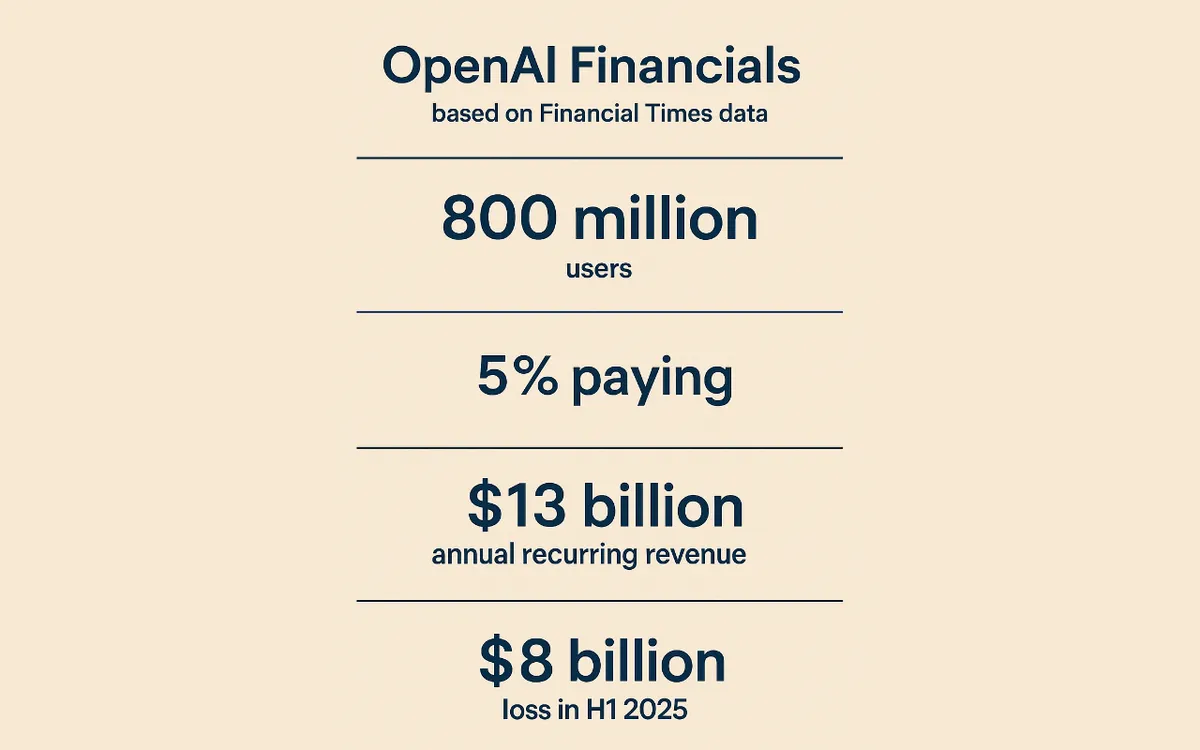

The numbers recently published by the Financial Times about OpenAI are striking.

The company has over 800 million users, but only 5% pay the $20 monthly subscription. In total, it brings in around $13 billion in annual recurring revenue, 70% of which comes from these subscriptions and 30% from APIs and pro offerings. Despite that, OpenAI lost $8 billion in the first half of 2025. In other words, for every dollar earned, they spend roughly two.
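As a rough sanity check, the figures quoted above can be run through a few lines of Python. This is only a back-of-the-envelope sketch: it assumes every paying user is on the $20 plan for twelve months, and that first-half revenue is simply half of the annual recurring revenue. Neither assumption comes from the Financial Times; the variable names are mine.

```python
# Figures as quoted in the text (whole numbers, in US dollars).
USERS = 800_000_000
PAYING_PERCENT = 5
MONTHLY_PRICE = 20
ARR = 13_000_000_000
SUBSCRIPTION_PERCENT = 70
H1_LOSS = 8_000_000_000

# Estimate 1: subscription revenue rebuilt from the user count.
# Assumption: every paying user pays $20 for 12 months.
paying_users = USERS * PAYING_PERCENT // 100
subs_from_users = paying_users * MONTHLY_PRICE * 12

# Estimate 2: subscription revenue as 70% of the quoted ARR.
subs_from_arr = ARR * SUBSCRIPTION_PERCENT // 100

# Assumption: first-half revenue is half of ARR.
h1_revenue = ARR // 2
h1_spend = h1_revenue + H1_LOSS
spend_per_dollar = h1_spend / h1_revenue

print(f"Paying users:                {paying_users:,}")
print(f"Subscriptions (from users):  ${subs_from_users:,}")
print(f"Subscriptions (70% of ARR):  ${subs_from_arr:,}")
print(f"Spend per dollar earned:     {spend_per_dollar:.2f}")
```

Under these assumptions the two subscription estimates ($9.6 billion and $9.1 billion) land within about half a billion dollars of each other, and spending comes out a little above two dollars per dollar earned.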

This situation highlights a simple fact: generative AI only stays standing because it’s propped up by venture capital. The models aren’t profitable. They’re technological showcases funded by investors who believe that by burning enough cash today, they’re buying the future of intelligent computing tomorrow. OpenAI has already made colossal commitments (over one trillion dollars over ten years) to purchase computing capacity from Oracle, Nvidia, AMD, and Broadcom, which will require the energy output of roughly twenty nuclear reactors.

I hear a lot of developers complain about the prices of tools like Cursor or Claude Code, but those prices reflect the physical reality of the problem. Each request runs through data centers packed with servers, ties up insanely expensive GPUs, and consumes a significant amount of energy.

The real cost of AI is its infrastructure.

Until the cost of computation collapses, the business model of AI will remain on life support. And that’s where, in my opinion, the next breakthrough lies: Small Language Models (SLMs). Smaller, specialized models capable of running locally or on standard hardware. Less power, less energy, less dependency on data centers.

At the same time, consumer hardware will evolve as well: computers and smartphones will increasingly integrate AI-dedicated cores, like AMD’s Ryzen AI Max chips or Apple’s M-series, which already embed specialized compute units.

Personally, I decided to invest in an AMD Ryzen AI Max+ 395 chip with 128GB of LPDDR5X memory. I’ll talk about it soon to show what it can do.

Source of figures: Financial Times.

Valérian de Thézan de Gaussan
