I’ve been benchmarking my Framework Desktop, which contains an AMD Ryzen AI Max+ 395 (Strix Halo) with 128GB of LPDDR5X unified memory, and it’s a great reality check on where local AI actually stands. Yes, this class of “AI PC” can run small models effortlessly and even push into the medium tier with decent results. But the moment you look at what it takes to match flagship cloud models, you hit a hard wall: these systems are designed around a ~128GB memory ceiling. That’s enough to load and run medium models, sometimes surprisingly well, but it’s nowhere near what frontier-class models are believed to require.

Let me unpack that.

1. We now have three real tiers of models

Right now the ecosystem naturally splits into:

- Small models (a few billion params). Example: Llama 3.x 3B-class models. These run on almost any decent laptop. They’re cheap, fast, and useful, but limited.

- Medium models (~70B dense or ~100B+ MoE). Examples: Llama 3.x 70B, or GPT-OSS-120B. These are much more capable, but they need serious memory. GPT-OSS-120B is a Mixture-of-Experts model, so it only activates ~5.1B params per token, which keeps it fast. All the experts still have to sit in memory, though, and it is 4-bit quantization that lets you run it in around ~60GB of RAM/VRAM.

- Large / flagship frontier models. Examples: Claude, Gemini, top-tier ChatGPT, etc. These are the ones that feel “unfairly good” at broad reasoning, coding, writing, and agentic tasks. They’re still datacenter beasts.

That tiering matters because hardware maps onto it directly.

2. Local AI hardware is hitting a hard ceiling

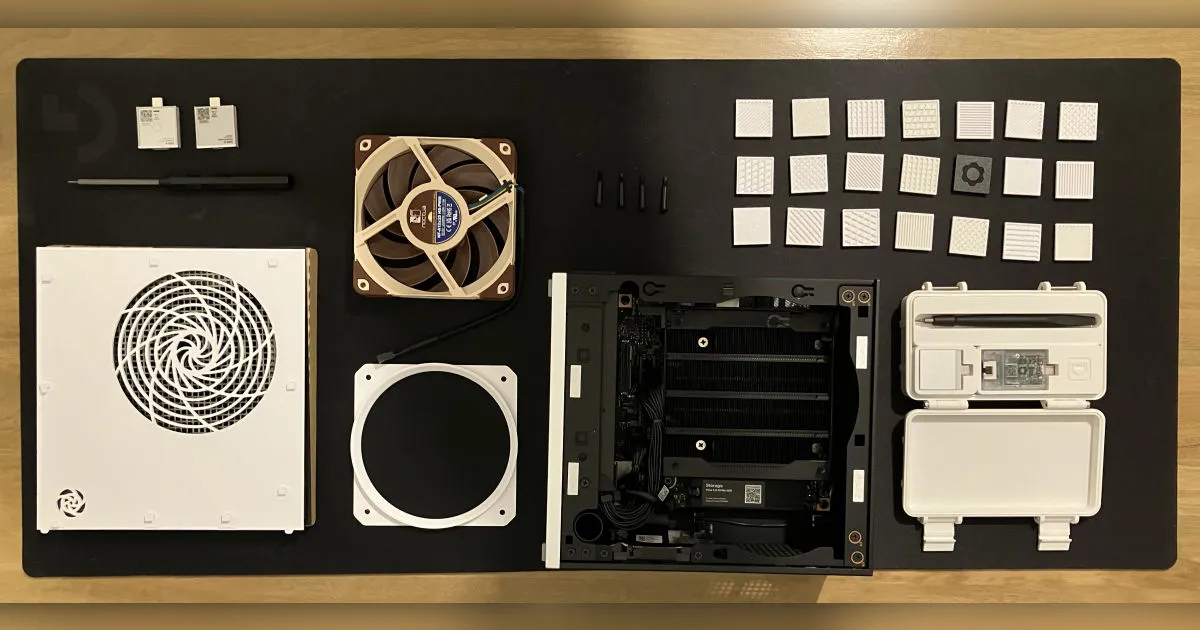

The new “local AI PC” wave (AMD Strix Halo / Ryzen AI Max+) is genuinely impressive. These machines ship with unified memory up to 128GB, and AMD even lets you carve out up to ~96GB of that as VRAM. That’s the sales pitch: a big shared memory for local LLMs.

But here’s the catch: 128GB is the ceiling the market has standardized around. So even if these chips get faster at inference over time, a machine capped at 128GB will never load a model that needs more memory than that.

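And most flagship models are, by all indications, way beyond that footprint. Even GPT-OSS-120B needs ~236GB in FP16. It stays in “barely local” territory only because quantization shrinks the weights to roughly a quarter of that, while MoE sparsity keeps generation fast enough to be usable.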
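To make that arithmetic concrete, here is a minimal sketch of the weights-only footprint calculation. The ~118B parameter count is an assumption back-derived from the ~236GB FP16 figure, and the function and variable names are mine, purely for illustration. It ignores KV cache and runtime overhead, so real requirements will be somewhat higher.

```python
# Rough weights-only memory footprint: parameters x bits per parameter.
# Ignores KV cache, activations, and runtime overhead, which add more on top.

MEMORY_CEILING_GB = 128  # unified memory ceiling discussed in this post


def weight_footprint_gb(params_billions: float, bits_per_param: float) -> float:
    """Return the approximate size of the weights in decimal gigabytes."""
    total_bytes = params_billions * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


# Parameter counts are illustrative; 118B is back-derived from ~236GB at FP16.
MODELS = {"3B dense": 3, "70B dense": 70, "GPT-OSS-120B (~118B total)": 118}
PRECISIONS = {"FP16": 16, "8-bit": 8, "4-bit": 4}

for model, params_b in MODELS.items():
    for precision, bits in PRECISIONS.items():
        size = weight_footprint_gb(params_b, bits)
        verdict = "fits" if size <= MEMORY_CEILING_GB else "does not fit"
        print(f"{model:28s} {precision:6s} {size:7.1f} GB  {verdict} in {MEMORY_CEILING_GB} GB")
```

Under these assumptions the ~118B model comes out at 236GB in FP16 and 59GB at 4-bit, and a 70B dense model only drops under the ceiling once it is quantized.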

Compute and bandwidth matter too, obviously. Strix Halo’s memory bandwidth is ~256–275 GB/s, which is great for an APU but still far below serious datacenter GPUs.
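A common back-of-the-envelope way to see why bandwidth matters: during token-by-token generation, every active weight has to be read from memory roughly once per token, so bandwidth divided by the bytes of active weights gives a loose upper bound on tokens per second. Here is a sketch under that assumption. The 256 GB/s value is the low end of the range above, the 3000 GB/s datacenter value is only an illustrative order of magnitude that I am assuming, and the function name is hypothetical. Real throughput will be lower, since this ignores KV cache reads, compute, and software overhead.

```python
# Bandwidth-bound estimate: each generated token requires reading all active
# weights once, so tokens/s <= memory bandwidth / bytes of active weights.


def decode_tokens_per_s_upper_bound(
    bandwidth_gb_s: float, active_params_billions: float, bits_per_param: float
) -> float:
    """Loose upper bound on single-stream decode speed, in tokens per second."""
    gb_read_per_token = active_params_billions * bits_per_param / 8
    return bandwidth_gb_s / gb_read_per_token


STRIX_HALO_GB_S = 256   # low end of the range quoted in this post
DATACENTER_GB_S = 3000  # assumed, illustrative order of magnitude only

# (active parameters in billions, bits per parameter)
WORKLOADS = {
    "70B dense, 4-bit": (70, 4),
    "MoE with ~5.1B active, 4-bit": (5.1, 4),
}

for name, (active_b, bits) in WORKLOADS.items():
    local = decode_tokens_per_s_upper_bound(STRIX_HALO_GB_S, active_b, bits)
    remote = decode_tokens_per_s_upper_bound(DATACENTER_GB_S, active_b, bits)
    print(f"{name:30s} local <= {local:6.1f} tok/s   datacenter <= {remote:7.1f} tok/s")
```

With these inputs, a dense 70B model at 4-bit is capped at roughly 7 tokens/s on Strix Halo, while an MoE with ~5.1B active parameters is capped at roughly 100 tokens/s. That gap is the practical benefit of sparsity on this kind of hardware.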

But even before compute becomes the limiting factor, memory already blocks the road.

So local machines are literally designed for small/medium models, not frontier ones.

3. Medium models are cool… but not frontier-equivalent

Medium models today are good. Sometimes very good. On certain tasks, with the right prompting or retrieval, they shine.

But if you use frontier models daily, the difference is obvious:

- reasoning depth

- consistency across long tasks

- ability to improvise or recover when things get messy

- fewer dumb failures on “normal” requests

Medium models feel like you’re constantly working around limits. For narrow workflows, that’s fine. For general everyday intelligence, it’s not yet the same league.

And since I’m looking at the future through today’s lens: local compute is not enough yet for generic AI behavior, unless you accept a noticeable downgrade.

4. Cloud flagships are cheap right now (and that distorts everything)

Another reason local isn’t compelling today is cost.

Frontier models on the cloud are artificially inexpensive relative to what it would cost to replicate their capability on-prem, because:

- the whole industry is flooded with VC money

- big players (Google, etc.) can subsidize compute to gain market share

- prices are strategic, not “true cost”

So from a user point of view: Why spend thousands on hardware to run a medium model locally, when you can rent a frontier model or a dedicated GPU for peanuts?

That doesn’t mean cloud will stay this cheap forever (nobody knows), but it explains why local feels like a not-so-good trade right now.

5. Why I still care about local: sovereignty

Even if local isn’t ready for general AI today, I care about it for one main reason: sovereignty.

A lot of organizations cannot send data to someone else’s servers. Period.

- sensitive client data

- strategic internal documents

- regulated or confidential workflows

For them, “just use the cloud” is not an option. Local AI is the only credible long-term direction for those cases.

And beyond enterprises, I also think individuals owning their compute matters, because you don’t want core cognitive tools to be permanently rented at someone else’s mercy.

Bottom line

Local AI isn’t a now-thing for frontier-level general intelligence. Hardware is capping out at ~128GB unified memory, which locks local machines into the medium tier. Medium models are impressive, but clearly not the same daily experience as flagships.

Cloud flagships are inexpensive because of subsidy and strategy, making local economically irrational today.

Local still matters long-term for sovereignty and ownership. So yeah: I hope local AI will be huge. It’s just not the case yet.

Valérian de Thézan de Gaussan
